First of all, I'd like to thank the anonymous poster who brought this paper (Zhang et al., Model-based Analysis of ChIP-Seq (MACS), Genome Biology) to my attention, and Xue, who passed me the link to it. It didn't show up in Google for me until this morning, so knowing where it was beforehand was very helpful.

Unlike the other ChIP-Seq software papers I've reviewed, this is the first one I feel was really well done - and it's a pretty decent read, too. My biggest complaint is just that we had printer issues this morning, so it took me nearly 2 hours to get it printed out. (-;

Ok. Before I jump into technical details, I just have to ask one (rhetorical) question to Dr. Johnson: is that really your work email address? (-:

MACS appears to be an interesting application. Feature for feature, it competes well with the other applications in its group. In fact, from the descriptions given, I think it does almost exactly the same calculations that are implemented in FindPeaks 3.1. (And yes, they seem to have used 3.1+ in their comparisons, though it's not explicitly stated which version was used.)

There are two ways in which MACS differs from the other applications:

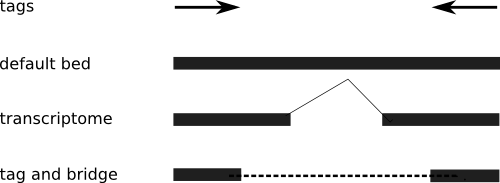

- The use of a "shift" (referred to as an "adaptive fragment length" in FindPeaks)

- The use of controls in a more coherent manner.

The first is interesting because it addresses one of the last open questions in ChIP-Seq data: how do you determine the actual length of the fragment behind a single-end tag?

Alas, while FindPeaks had a very similar module for calculating fragment lengths in version 3.1.0, it was never released to the public. Here, MACS beats FindPeaks to the punch, and has demonstrated that an adaptive fragment size can work. In fact, their solution is quite elegant: identify the top X peaks, then measure how far from each peak the tag ends typically fall. I think there's still room for improvement, but this has now opened the Pandora's box of fragment length estimation for everyone to get their hands dirty and play with. And it's about time.
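To make the idea concrete, here is a minimal Python sketch of strand-offset fragment length estimation. It assumes you already have, for each high-confidence peak, the 5' positions of the forward- and reverse-strand tags; the function name and input layout are hypothetical. As I understand the paper, MACS aligns tags from many peaks before locating the two strand modes, whereas this sketch takes a per-peak offset and reports the median, which is a simplification.

```python
import statistics
from collections import Counter


def estimate_fragment_length(peaks):
    """Estimate fragment length d from strand-separated tags around peaks.

    peaks: list of (forward_5prime_positions, reverse_5prime_positions) pairs,
    one pair per high-confidence peak (hypothetical input format).
    Forward-strand 5' ends pile up to the left of a binding site and
    reverse-strand 5' ends to the right; the gap between the two modes
    approximates the fragment length.
    """
    offsets = []
    for fwd, rev in peaks:
        if not fwd or not rev:
            continue  # need tags on both strands to measure an offset
        fwd_mode = Counter(fwd).most_common(1)[0][0]
        rev_mode = Counter(rev).most_common(1)[0][0]
        if rev_mode > fwd_mode:
            offsets.append(rev_mode - fwd_mode)
    if not offsets:
        raise ValueError("no peak had a usable forward/reverse offset")
    # The median across peaks damps the influence of noisy individual peaks.
    return statistics.median(offsets)


peaks = [
    ([100, 100, 101], [300, 300, 299]),
    ([500, 500, 498], [702, 702, 705]),
]
print(estimate_fragment_length(peaks))  # 201.0
```

Each tag would then be moved d/2 toward its 3' end (or extended to length d) before building the pileup.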

The second improvement is their handling of controls. Several applications have moved "control" handling into the core algorithms, while FindPeaks has chosen to keep it part of the post-processing. Again, this is a good step in the right direction, and I'm glad to see them make controls an integral (though optional) part of the ChIP-Seq analysis.
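For readers who haven't seen how a control can enter the core calculation, here is a rough Python sketch in the spirit of the MACS paper's "local lambda": the expected tag count for a candidate region is taken as the largest of a genome-wide background rate and rates estimated from the control in windows (e.g. 1 kb, 5 kb and 10 kb) centred on the candidate, scaled to the ChIP library size, and the region is then scored with a Poisson upper-tail p-value. The function names and parameters are my own; this is an illustration under those assumptions, not MACS's actual code.

```python
import math


def _log_poisson_pmf(i, lam):
    """log P(X = i) for X ~ Poisson(lam), computed in log space to avoid overflow."""
    return -lam + i * math.log(lam) - math.lgamma(i + 1)


def poisson_sf(k, lam):
    """P(X >= k) for X ~ Poisson(lam), lam > 0."""
    if k <= 0:
        return 1.0
    if k <= lam:
        # Upper tail is large here, so 1 - P(X < k) loses little precision.
        lower = sum(math.exp(_log_poisson_pmf(i, lam)) for i in range(k))
        return max(0.0, 1.0 - lower)
    # Upper tail is small: sum it directly. For i > lam the terms shrink,
    # so stop once they no longer change the total.
    total = 0.0
    i = k
    term = math.exp(_log_poisson_pmf(k, lam))
    while term > total * 1e-17:
        total += term
        i += 1
        term *= lam / i
    return total


def local_lambda(control_counts, width, n_chip, n_control, genome_size):
    """Expected ChIP tag count in a candidate region of length `width` (hypothetical helper).

    control_counts: {window_length: control tags in that window centred on the candidate}
    genome_size: effective (mappable) genome size.
    """
    scale = n_chip / n_control  # put control counts on the ChIP library's scale
    lam_bg = n_chip * width / genome_size  # genome-wide background rate
    lam_ctrl = [c * scale * width / w for w, c in control_counts.items()]
    return max([lam_bg] + lam_ctrl)


lam = local_lambda({1000: 5, 5000: 10, 10000: 30}, width=200,
                   n_chip=1_000_000, n_control=1_000_000,
                   genome_size=1_000_000_000)
print(lam)                 # 1.0 (the 1 kb control window dominates)
print(poisson_sf(8, lam))  # p-value for seeing 8 ChIP tags in the region
```

Taking the maximum is the conservative choice: a local bump in the control raises the bar for calling a peak there, instead of being averaged away by the genome-wide rate.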

To get into the specifics of the paper, there are several points that are worth discussing.

As a minor point, the terminology in the paper is mildly different, and doesn't seem to reflect the jargon used in the field. They describe fragment lengths in terms of shifting tag positions rather than extending tag lengths, and the terms "Watson strand" and "Crick strand" are used to refer to the forward and reverse strands - I haven't heard those terms used since high school, but it's cool. (=
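The two descriptions are really two views of the same operation. A small sketch with hypothetical helper names, treating positions as continuous and ignoring 0- vs 1-based and inclusive/exclusive coordinate conventions: shifting a tag's 5' end by d/2 toward its 3' end lands exactly on the midpoint of the fragment you'd get by extending that tag to length d.

```python
def shift_tag(pos, strand, d):
    """Shift-style: move the 5' end d/2 toward the 3' end (the fragment centre)."""
    return pos + d / 2 if strand == "+" else pos - d / 2


def extend_tag(pos, strand, d):
    """Extension-style: the fragment of length d that starts at the tag's 5' end."""
    return (pos, pos + d) if strand == "+" else (pos - d, pos)


for strand in "+-":
    start, end = extend_tag(1000, strand, 200)
    print(strand, shift_tag(1000, strand, 200), (start + end) / 2)
# + 1100.0 1100.0
# - 900.0 900.0
```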

Feature-wise, MACS implements familiar concepts such as:

- duplicate filtering

- thresholding misnamed as FDR (just like FindPeaks!)

- peak finding that reports the peak max location

- requirement of an effective genome size
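Duplicate filtering is the simplest of these. A minimal sketch, assuming tags are (chromosome, 5' position, strand) tuples and that identical tags beyond a chosen count are treated as PCR artifacts; the function name and the `max_dups` parameter are illustrative, not options of either tool.

```python
from collections import defaultdict


def filter_duplicates(tags, max_dups=1):
    """Keep at most `max_dups` tags sharing the same (chrom, pos, strand)."""
    seen = defaultdict(int)
    kept = []
    for tag in tags:
        if seen[tag] < max_dups:
            seen[tag] += 1
            kept.append(tag)
    return kept


tags = [("chr1", 100, "+")] * 3 + [("chr1", 100, "-"), ("chr1", 101, "+")]
print(len(filter_duplicates(tags)))              # 3
print(len(filter_duplicates(tags, max_dups=2)))  # 4
```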

and then offers some very nice touches:

- p-value cutoffs for peak reporting

- built-in analysis of controls

- built-in saturation calculations

All together, this makes for a great roundup for a head-to-head competition. So guess what they do next? That's right: it's a peak-finding bake-off!

For each test, MACS is compared against ChIP-Seq Peak Finder (from the Wold lab), FindPeaks (GSC) and QuEST (Valouev). And the competition is on (See Figure 2 in the paper).

Test number one (a) shows that MACS is able to keep the FDR very low for the "known set of FOXA1 binding sites" compared to the other three peak finders. This graph could be either very informative or very confusing, as there are no details on how the number of sites for each application was chosen, whose FDR was used, and so on. It could be reporting that MACS underestimates the real FDR for its peaks, that the other peak finders overstate the FDR for their peaks, or that only MACS is able to identify peaks that are true positives. MACS wins this round - though it may be on a technicality.

(If the peaks are all the same across peak callers, then all this tells us is that each peak caller assigns them a different FDR, so I'm not even sure it's a test of any sort.)
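Part of what makes the FDR axis hard to read is that each tool defines "FDR" its own way. As I understand it, the MACS paper's empirical FDR comes from calling peaks a second time with ChIP and control swapped, then taking the ratio of control peaks to ChIP peaks that pass the same p-value cutoff. A minimal sketch of that ratio, with a hypothetical function name and inputs:

```python
def empirical_fdr(chip_pvalues, swapped_pvalues, cutoff):
    """Ratio of swapped-sample (control-as-ChIP) peaks to ChIP peaks at a p-value cutoff.

    chip_pvalues: p-values of peaks called on ChIP vs. control.
    swapped_pvalues: p-values of peaks called on control vs. ChIP.
    """
    n_chip = sum(1 for p in chip_pvalues if p <= cutoff)
    n_swapped = sum(1 for p in swapped_pvalues if p <= cutoff)
    if n_chip == 0:
        return 0.0  # no ChIP peaks pass the cutoff, so nothing to be wrong about
    return min(1.0, n_swapped / n_chip)


chip = [1e-10, 1e-8, 1e-6, 1e-4]
swapped = [1e-7, 1e-3]
print(empirical_fdr(chip, swapped, cutoff=1e-5))  # 1 swapped peak / 3 ChIP peaks
```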

Test number two (b) repeats test number one for a different binding site, and the same problem occurs here. I still can't tell whether the same binding sites are being compared across peak callers, which makes this comparison rather meaningless except as a way to understand the FDR biases of each peak caller.

Test number three (c) starts to be a little more informative: for a subset of peaks, how many of them contain the motif of interest? With the exception of the Wold lab's Peak Finder, they all start out around 65% for the top(?) 1000 peaks, and then slowly wind their way down. By 7000 peaks, MACS stays up somewhere near 60%, FindPeaks is at about 55%, and QuEST never actually makes it that far, ending up at about 58% by 6000 peaks. (The Wold lab Peak Finder starts at 58% and ends at about 54% at 6000 peaks as well.) So MACS seems to win this one - its enrichment stays the best over the greatest number of peaks. This is presumably due to its integration of controls into the application itself. Not surprisingly, QuEST, which also integrates controls, ends up a close second. FindPeaks and the Wold lab Peak Finder lose here. (As a small note, one assumes that many of the peaks would be filtered out in the post-processing used with the Wold lab's software and FindPeaks, but that brings in a whole separate pipeline of tools.)
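The measurement in this test is easy to reproduce for any peak caller's output. A sketch, assuming each peak carries a score used for ranking and a flag saying whether the motif was found in it (the motif scan itself is out of scope, and the names are hypothetical):

```python
def motif_fraction_curve(peaks, step=1000):
    """peaks: list of (score, has_motif) pairs.

    Ranks peaks by score (highest first) and returns (n, fraction) for the
    top-n peaks at every multiple of `step`.
    """
    ranked = sorted(peaks, key=lambda p: p[0], reverse=True)
    curve = []
    hits = 0
    for n, (_, has_motif) in enumerate(ranked, start=1):
        hits += int(has_motif)
        if n % step == 0:
            curve.append((n, hits / n))
    return curve


peaks = [(7, True), (10, True), (8, False), (9, True)]
print(motif_fraction_curve(peaks, step=2))  # [(2, 1.0), (4, 0.75)]
```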

The fourth test is the same as the third, but with the NRSF data set. The results are tighter this time, and surprisingly, FindPeaks ends up doing just a little better than QuEST, despite the lack of built-in control checking.

The fifth and sixth tests roughly parallel the last two, but this time measure the spatial resolution of the motif. Like the fourth test, MACS wins again, with FindPeaks a close second for most tests and QuEST a hot contender. But it's really hard to get excited about this one: the gap between best and worst rarely exceeds 3 bases for FOXA1, and for NRSF, MACS appears to stay within 1-1.5 bases of FindPeaks' distance to the motif.
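Spatial resolution here boils down to how far each reported peak maximum sits from the nearest motif. A sketch, assuming you already have summit positions and motif-centre positions for each peak (hypothetical inputs; peaks without a motif are simply skipped):

```python
import statistics


def mean_summit_to_motif_distance(peaks):
    """peaks: list of (summit_pos, [motif_centre_pos, ...]) pairs.

    Returns the mean distance in bases from each summit to its nearest motif,
    ignoring peaks with no motif.
    """
    distances = [min(abs(summit - m) for m in motifs)
                 for summit, motifs in peaks if motifs]
    if not distances:
        raise ValueError("no peaks contain the motif")
    return statistics.fmean(distances)


peaks = [(100, [105, 140]), (200, [198]), (300, []), (400, [400])]
print(mean_summit_to_motif_distance(peaks))  # (5 + 2 + 0) / 3
```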

Take-away message: MACS does appear to be the best of the applications tested, while the Wold lab Peak Finder seems to lose in all cases. Ouch. QuEST and FindPeaks duke it out for second place, trading punches.

Like all battles, however, this is far from the last word on the subject. Both MACS and FindPeaks are now being developed as open source applications, which means that I expect to see future rounds of this battle being played out - and a little competition is a good thing. I know FindPeaks is pushing ahead in terms of improving FDR/thresholding, and I'm sure all of the other applications are as well.

One problem with all of these applications is that they all still assume a Poisson (or similar) model for the noise/peaks, which, as we're all slowly learning, isn't all it's cracked up to be. (See ChIP-Seq in Silico.)
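One quick way to see how well a Poisson model fits is the index of dispersion: bin tag positions into fixed windows and compare the variance of the counts to their mean. Under a Poisson model the ratio should be near 1; ratios well above 1 indicate overdispersion. A minimal sketch with hypothetical names and a toy genome:

```python
import statistics


def window_counts(positions, genome_length, window):
    """Count tags (0-based positions < genome_length) in consecutive fixed-size windows."""
    n_windows = -(-genome_length // window)  # ceiling division
    counts = [0] * n_windows
    for p in positions:
        counts[p // window] += 1
    return counts


def dispersion_index(counts):
    """Variance-to-mean ratio; roughly 1 for Poisson-distributed counts."""
    mean = statistics.fmean(counts)
    if mean == 0:
        raise ValueError("no tags in any window")
    return statistics.pvariance(counts, mu=mean) / mean


# Toy example: every tag piled into one window, as a strong peak would be.
counts = window_counts([3500] * 10, genome_length=4000, window=1000)
print(counts)                    # [0, 0, 0, 10]
print(dispersion_index(counts))  # 7.5
```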

That said, I think we can now safely say that the low-hanging fruit of ChIP-Seq has all been picked. Four independently developed software applications have been compared, and they all find roughly the same peaks, within a very narrow margin of error on the peak maxima.

To wrap this up, I think it's pretty clear that the next round of ChIP-Seq software will be battled out by those projects that gain some momentum and community. With the peak finders giving more or less equivalent performance (with MACS just a hair above the others for the moment) and more peak finders becoming open source, the question of which one will continue moving forwards can be viewed as a straight software problem. Which peak finder will gain the biggest and most vibrant community?

That, it seems, will probably come down to a question of programming languages.