You can probably guess what this post is about from the title - which means I still haven't gotten around to writing an entry on thresholding for ChIP-Seq. Actually, it's probably a good thing I haven't, as we've been learning a lot about thresholding in the past week. It seems many things we took for granted aren't really the case. Anyhow, I'm not going to say too much about that, as I plan to collect my thoughts and discuss it in a later entry.

Instead, I'd like to discuss the 2008 Genomics Forum, sponsored by Genome BC, which took place on Friday - though, in particular, I'm going to focus on one talk, near to my own research.

Dr. Barbara Wold from Caltech gave the first of the science talks, and focussed heavily on ChIP-Seq and Whole Transcriptome Shotgun Sequencing (WTSS). But before I get to that, I wanted to mention a few other things.

The first is that Genome BC took a few minutes to announce a really neat funding competition, the Genome BC Science Opportunities Fund, which really impressed me. (There's nothing up on the web page yet, but if you google for it, you'll come across the agenda for Friday's forum in which it's mentioned - I'm sure more will appear soon.) Its whole premise revolves around the question: "Are there experiments that we need to be doing, that are of strategic importance to the BC life science community?" I take that to mean: are there projects that we can't afford not to undertake, that we wouldn't have the funding to do otherwise? I find that to be very flexible, and very non-academic in nature - but quite neat. I hope the funding competition goes well, and I'm looking forward to seeing what they think falls into the "must do" category.

The second was the surprising demand for bioinformaticians. I'm aware of several jobs for bioinformaticians with experience in next-gen sequencing, but the surprise to me was the number of times (5) I heard people mention that they were actively recruiting. If anyone with next-gen experience is out there looking for a job (post-doc, full-time or grad student), drop me a note, and I can probably point you in the right direction.

The third was one of the afternoon talks, on journalism in science, from the perspective of traditional newspaper/TV journalists. It seems so foreign to me, yet the talk touched on several interesting points, including the fact that journalists are struggling to come to terms with "new media." (... which doesn't seem particularly new to those of us who have been using the net since the 90s, but I digress.) That gave me several ideas about things I can do with my blog, to bring it out of the simple text format I use now. I guess even those of us who live/breathe/sleep internet don't do a great job of harnessing its power for communicating effectively. Food for thought.

Ok... so on to the main topic of tonight's blog: Dr. Wold's talk.

Dr. Wold spoke at length on two topics, ChIP-Seq and Whole Transcriptome Shotgun Sequencing. Since these are the two subjects I'm actively working on, I was obviously very interested in hearing what she had to say, though I'll comment more on the ChIP-Seq side of things.

One of the great open questions at the Genome Sciences Centre has been how to do an effective control for a ChIP-Seq experiment. It's not something we've done much of in the past, but the Wold lab demonstrated why controls are necessary, and how to do them well. It seems that ChIP-Seq experiments tend to yield fragments in several genomic regions that have nothing to do with the antibody or experiment itself. The educated guess is that these are caused by hypersensitive sites in the genome that tend to fragment in repeatable patterns, giving rise to peaks that appear in all samples. Indeed, I spent a good portion of this past week talking about observations of peaks exactly like that, and how to "filter" them out of the ChIP-Seq results. I wasn't able to get a good idea of how the Wold lab does this, other than by eye (which isn't very high throughput), but knowing what needs to be done now, it shouldn't be particularly difficult to incorporate into our next release of the FindPeaks code.
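To make the "filter" idea concrete, here is a minimal sketch of one way to drop sample peaks that also show up in a control lane. It is not how the Wold lab or FindPeaks does it; the `Peak` record, the `filter_against_control` function and the `min_ratio` cutoff are all hypothetical names and values chosen for illustration.

```python
from typing import List, NamedTuple


class Peak(NamedTuple):
    """A called peak (hypothetical record layout, for illustration only)."""
    chrom: str
    start: int     # 0-based, inclusive
    end: int       # 0-based, exclusive
    height: float  # e.g. maximum read coverage within the peak


def overlaps(a: Peak, b: Peak) -> bool:
    """True if the two peaks share at least one base on the same chromosome."""
    return a.chrom == b.chrom and a.start < b.end and b.start < a.end


def filter_against_control(sample: List[Peak], control: List[Peak],
                           min_ratio: float = 2.0) -> List[Peak]:
    """Keep sample peaks with no overlapping control peak, or whose height is
    at least `min_ratio` times the tallest overlapping control peak.

    `min_ratio` is an assumed, arbitrary cutoff, not a recommended value.
    """
    kept = []
    for peak in sample:
        control_heights = [c.height for c in control if overlaps(peak, c)]
        if not control_heights or peak.height >= min_ratio * max(control_heights):
            kept.append(peak)
    return kept


if __name__ == "__main__":
    sample = [Peak("chr1", 100, 200, 50.0), Peak("chr1", 1000, 1100, 20.0)]
    control = [Peak("chr1", 1050, 1150, 15.0)]
    for peak in filter_against_control(sample, control):
        print(peak)
```

The nested scan is quadratic in the number of peaks, which is fine for a sketch; a real implementation would sort the peaks or use an interval index, and would probably normalize heights for sequencing depth before comparing lanes.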

Another smart thing that the Wold lab has done is to separate the interactions of ChIP-Seq into two different types: Type 1 and Type 2. Type 1 refers to single molecule-DNA binding events, which give rise to sharp peaks and very clean profiles. These tend to be transcription factors like NRSF or STAT1, upon which the first generation of ChIP-Seq papers were published. Type 2 interactomes tend to be less clear, as they are transcription factors that recruit other elements, or form complexes that bind to the DNA at specific sites, and require other proteins to bind to encourage transcription. My own interpretation is that the number of identifiable binding sites should indicate the type, and thus, if there were three identifiable transcription factor consensus sites lined up, it should be considered a Type 3 interactome, though that may be simplifying the case tremendously, as there are, undoubtedly, many other proteins that must be recruited before any transcription will take place.

In terms of applications, the members of the Wold lab have been using their identified peaks to locate novel binding site motifs. I think this is the first thing everyone thinks of when they hear of ChIP-Seq for the first time, but it's pretty cool to see it in action. (We also do it at the GSC, I might add.) The neatest thing, however, was that they were able to identify a rather strange binding site, with two halves of a motif, split by a variable distance. I haven't quite figured out how that works, in terms of DNA/protein structure, but it's conceptually quite neat. They were able to show that the distance between the two halves of the structure varies by 10-20 bases, making it a challenge to identify for most traditional motif scanners. Nifty.
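As a rough illustration of why a split motif trips up a fixed-width scanner, here is a small sketch that looks for two half-sites separated by a variable gap. The half-site sequences in the example are made-up placeholders, not the motif from the talk, and reading "10-20 bases" as the allowed gap range is an assumption. It uses exact string matching, whereas real motif scanners typically score positions with a weight matrix.

```python
from typing import List, Tuple


def find_split_motif(sequence: str, left_half: str, right_half: str,
                     min_gap: int = 10, max_gap: int = 20) -> List[Tuple[int, int]]:
    """Return (start, gap) for every exact occurrence of `left_half` followed,
    after between `min_gap` and `max_gap` arbitrary bases, by `right_half`.

    `start` is the 0-based position of the left half-site in `sequence`.
    """
    sequence = sequence.upper()
    left_half = left_half.upper()
    right_half = right_half.upper()
    hits = []
    start = sequence.find(left_half)
    while start != -1:
        left_end = start + len(left_half)
        # Try every allowed spacer length; a fixed-width scanner would only
        # ever test one of these.
        for gap in range(min_gap, max_gap + 1):
            if sequence.startswith(right_half, left_end + gap):
                hits.append((start, gap))
        # Step by one base so overlapping left half-sites are not missed.
        start = sequence.find(left_half, start + 1)
    return hits


if __name__ == "__main__":
    # Placeholder half-sites, purely hypothetical.
    seq = "AAAA" + "TTCAG" + "C" * 12 + "GGACA" + "TTT"
    print(find_split_motif(seq, "TTCAG", "GGACA"))
```

The point of the inner loop is that each spacer length is effectively a different motif to a scanner that expects one contiguous pattern, so the signal gets diluted across many weak variants.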

Another neat thing, which I think everyone knows but which was cool to hear has actually been shown, is that the binding sites often line up on areas of high conservation across species. I use that as a test for my own work, but it was good to have it confirmed.

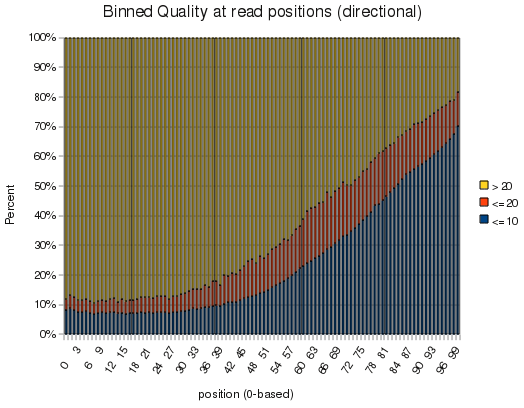

Finally, one of the things Dr. Wold mentioned was that they were interested in using the information in the directionality of reads in their analysis. Oddly enough, this was one of the first problems I worked on in ChIP-Seq, months ago, and I discovered several ways to handle it. I enjoyed knowing that there's at least one thing my own ChIP-Seq code does that is unique, and possibly better than the competition. (-;

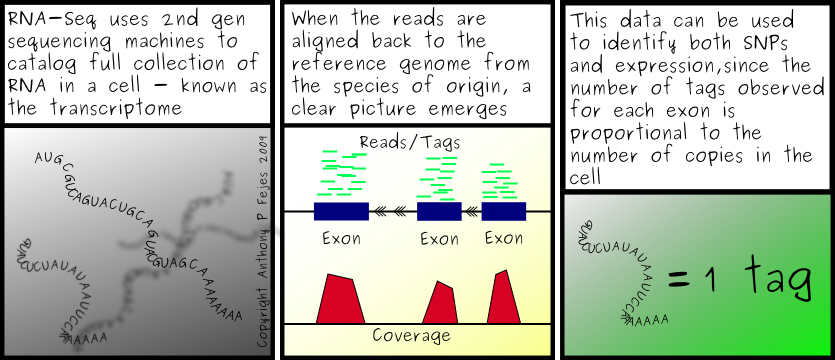

As for transcriptome work, there were only a couple of things worth mentioning. The Wold lab seems to be using MAQ and a list of splice junctions assembled from annotated exons to map the transcriptome sequences. I've heard that before, actually, from someone at the GSC who is doing exactly the same thing. It's a small world. I'm not really a fan of the technique, however. Yes, you'll get a lot of the exon junction reads, but you'll only find the ones you're looking for, which is exactly the criticism all the next-gen people throw at the use of microarrays. There has got to be a better solution... but I don't yet know what it is. (We thought it was Exonerate, but we can't seem to get it to work well, due to several bugs in the software. It's clearly a work in progress.)
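For anyone who hasn't seen the junction-library approach, here is a minimal sketch of the general idea: stitch together the end of one annotated exon and the start of a later one, so that junction-spanning reads have something to align to. This is an assumption about the general shape of such a library, not the Wold lab's actual pipeline; `junction_library` and its arguments are hypothetical names.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def junction_library(exons: List[str], read_length: int) -> Dict[Tuple[int, int], str]:
    """Build junction sequences for every ordered pair of annotated exons.

    `exons` holds the exon sequences of one gene in transcript order. Each
    junction keeps `read_length - 1` bases on either side, so any read that
    aligns across it must cover at least one base from each exon.
    """
    if read_length < 2:
        raise ValueError("read_length must be at least 2")
    flank = read_length - 1
    library = {}
    for (i, upstream), (j, downstream) in combinations(enumerate(exons), 2):
        # Exons shorter than `flank` simply contribute their full sequence.
        library[(i, j)] = upstream[-flank:] + downstream[:flank]
    return library


if __name__ == "__main__":
    # Toy exon sequences, purely illustrative.
    for pair, seq in junction_library(["AAAAGG", "CCTT", "GGGGAA"], read_length=3).items():
        print(pair, seq)
```

The limitation is visible right in the function signature: the library is built only from the exons passed in, so a junction involving an unannotated exon or an alternative splice site can never be found.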

Anyhow, I think I'm going to stop here. I'll just sum it all up by saying it was a pretty good talk, and it's given me lots of things to think about. I'm looking forward to getting back to coding tomorrow.

Labels: Chip-Seq, Sequencing, Solexa/Illumina, Talks, transcriptome